Data assimilation and inverse problems

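This class covers the basics of data assimilation and inverse problems, i.e., how to merge models and data. Topics include the Kalman filter and the EnKF, variational methods, importance sampling and particle filters, and MCMC.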

- Homework 1, due September 6.

- Homework 2, due September 20.

- Homework 3, due October 18.

Observations of a 40-dimensional system.

Observations of a 400-dimensional system.

- Homework 4, due November 20.

- Homework 5, due January 17.

- Homework 6, due January 31.

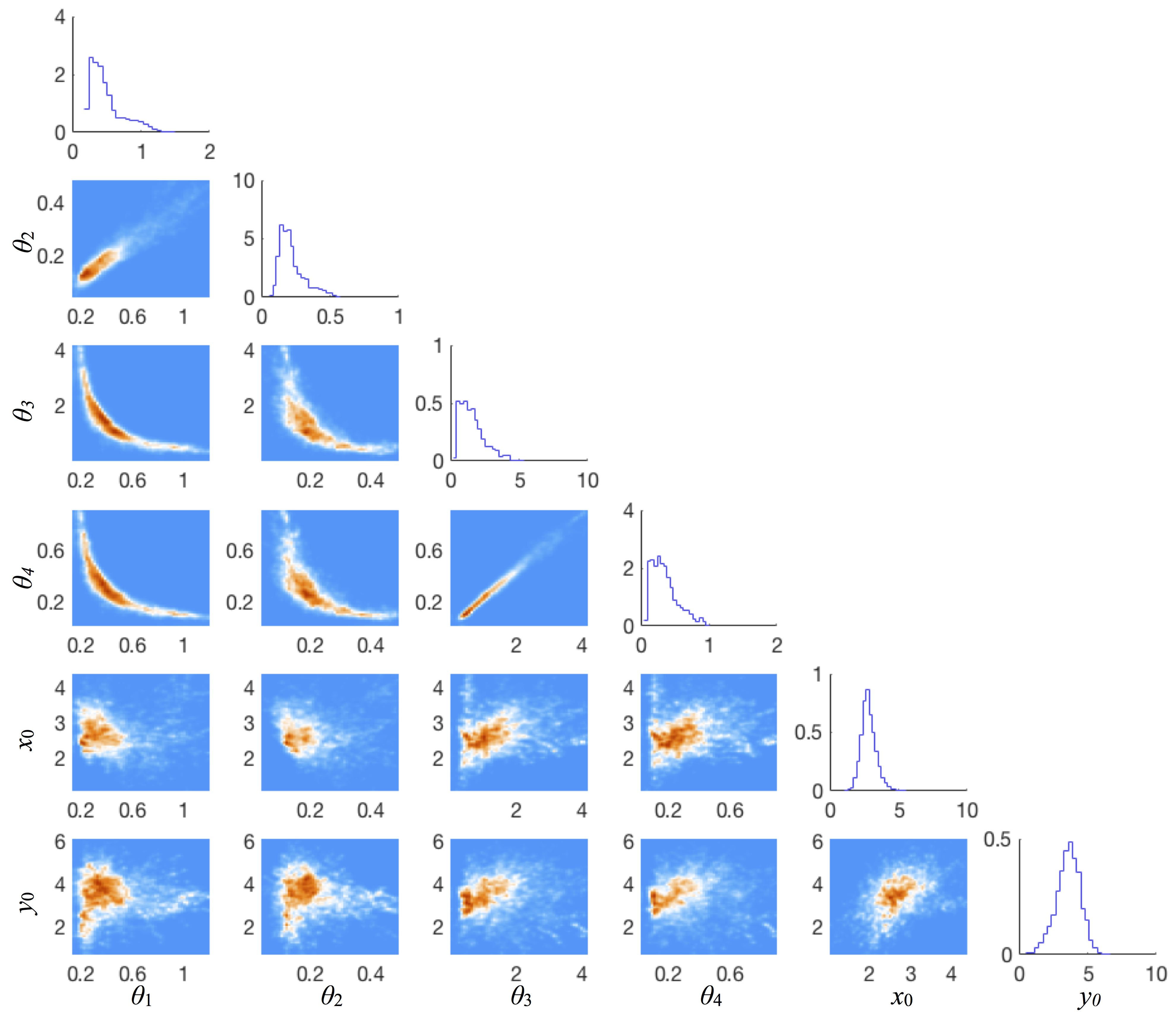

- Homework 7, due February 14. Triangle plotter: Plotter, and color map.

- Homework 8, due March 14.

- Homework 9, and data. Due TBD.

- Lecture 1: Rapid review of probability and eclipse viewing.

- Lecture 2: Rapid review of random variables.

- Lecture 3: Review of conditional probability and basic ideas of Monte Carlo.

- Lecture 4: How to draw samples from standard distributions, Kalman filter.

- Lecture 5: Discussion of Homework 1.

- Lecture 6: Kalman filter.

- Lecture 7: EnKF (perturbed obs).

- Lecture 8: Kalman filter homework discussion, more EnKF.

- Lecture 9: HPC workshop. Slides

- Lecture 10: EnKF (square root).

- Lecture 11: EnKF in practice: Localization.

- Lecture 12: EnKF in practice: Localization, inflation, tuning.

- Lecture 13: EnKF and BLUE and nonlinear problems. Rapid refresher of numerical optimization.

- Lecture 14: Guest lecture (Travis): LETKF. Slides

- Lecture 15: Gauss-Newton and connections with Kalman gain

- Lecture 16: 4D-Var for nonlinear problems

- Lecture 17: EnKF Homework discussion

- Lecture 18: Cycling 4D-Var

- Lecture 19: E4DVar

- Lecture 20: EDA and RTO

- Lecture 21: Introduction to importance sampling

- Lecture 22: How to construct "good" proposal distributions

- Lecture 23: Review of 4DVar and the optimal proposal distribution

- Lecture 24: 4DVar homework discussion

- Lecture 25: 4DVar homework discussion (continued). Stan's notes on derivatives

- Lecture 26: Importance sampling, effective sample size

- Lecture 27: Importance sampling, effective sample size, resampling

- Lecture 28: Review, small noise theory for Gaussian proposal

- Lecture 29: Discussion of Homework 5

- Lecture 30: Non-Gaussian proposals: symmetrization and small noise analysis

- Lecture 31: Non-Gaussian proposals: random map, part 1 of 2.

- Lecture 32: Non-Gaussian proposals: random map, part 2 of 2.

- Lecture 33: Limitations of sampling in high dimensions.

- Lecture 34: Particle filters: target distributions

- Lecture 35: Immigrant student resource center and particle filters: family of proposal distributions

- Lecture 36: Homework 7 discussion

- Lecture 37: Standard particle filter part 1 of 2

- Lecture 38: Standard particle filter part 2 of 2

- Lecture 39: Optimal particle filter part 1 of 2

- Lecture 40: Optimal particle filter part 2 of 2

- Lecture 41: Inverse problems and reality check

- Lecture 42: Homework 8 discussion

- Lecture 43: Guest lecture: Peter Jan van Leeuwen

- Lecture 44: Summary and discussion of particle filters

- Lecture 45: MCMC basics

- Lecture 46: Metropolis-Hastings, random walk Metropolis

- Lecture 47: How is my MCMC sampler doing? Integrated autocorrelation time and effective sample size

- Lecture 48: Function space MCMC: pCN

- Lecture 49: Gibbs sampling

- Lecture 50: Guest lecture by Ave

- Lecture 51: Guest lecture by Ave

- Lecture 52: Langevin, MALA and Hamiltonian MCMC

- Lecture 53: Homework 9 discussion

- Lecture 54: Final project presentations 1/2

- Lecture 55: Final project presentations 2/2

Probability, random variables, textbooks

- A.J. Chorin and O.H. Hald, Stochastic Tools in Mathematics and Science (3rd Edition). Springer, 2013.

- S. Reich and C. Cotter, Probabilistic Forecasting and Bayesian Estimation. Cambridge University Press, 2015.

- M. Bocquet, M. Asch, and M. Nodet, Data Assimilation: Methods, Algorithms, and Applications. SIAM, 2016.

- K. Law, A. Stuart, K. Zygalakis, Data Assimilation: A Mathematical Introduction. Springer, 2015.

- Art Owen's lecture notes on MCMC

EnKF

- G. Burgers, P.J. van Leeuwen, and G. Evensen, Analysis scheme in the ensemble Kalman filter, Monthly Weather Review Vol. 126, pp. 1719–1724, 1998.

- P.L. Houtekamer and F. Zhang, Review of the ensemble Kalman filter for atmospheric data assimilation, Monthly Weather Review Vol. 144, pp. 4489–4532, 2016.

- M.K. Tippett, J.L. Anderson, C.H. Bishop, T.M. Hamill, and J.S. Whitaker, Ensemble Square Root Filters, Monthly Weather Review, Vol. 131, pp. 1485–1490, 2003.

- B.R. Hunt, E.J. Kostelich, and I. Szunyogh, Efficient data assimilation for spatiotemporal chaos: A local ensemble transform Kalman filter, Physica D: Nonlinear Phenomena, Vol. 230 (1–2), pp. 112–126, 2007.

- G. Evensen, Data assimilation: the ensemble Kalman filter, Second edition, Springer, 2009.

- G. Gaspari and S.E. Cohn, Construction of correlation functions in two and three dimensions, Quarterly Journal of the Royal Meteorological Society, Vol. 125 (554), pp. 723–757, 1999.

- T.M. Hamill, J.S. Whitaker, J.L. Anderson, and C. Snyder, Comments on "Sigma-point Kalman filter data assimilation methods for strongly nonlinear systems", Journal of the Atmospheric Sciences, Vol. 66, pp. 3498–3500, 2009.

Variational methods

- O. Talagrand, P. Courtier, Variational assimilation of meteorological observations with the adjoint vorticity equation. I: Theory, Quarterly Journal of the Royal Meteorological Society 113(478): 1311–1328, 1987.

- Chapter 2 of: A. Fournier, G. Hulot, D. Jault, W. Kuang, A. Tangborn, N. Gillet, E. Canet, J. Aubert, F. Lhuillier, An introduction to data assimilation and predictability in geomagnetism, Space Science Reviews 155, pp. 247–291, 2010.

- J. Poterjoy, F. Zhang, Systematic comparison of four-dimensional data assimilation methods with and without the tangent linear model using hybrid background error covariance: E4DVar versus 4DEnVar, Monthly Weather Review 143(5): 1601–1621, 2015.

- J. Bardsley, A. Solonen, H. Haario, M. Laine, Randomize-then-optimize: A method for sampling from posterior distributions in nonlinear inverse problems, SIAM Journal on Scientific Computing 36(4): A1895–A1910, 2014.

Importance sampling

- D.J.C. MacKay, Introduction to Monte Carlo Methods, in "Learning in Graphical Models", NATO Science Series 89: 175-204, 1998.

- Jonathan Goodman's lecture notes.

- J. Goodman, K. Lin, M. Morzfeld, Small-noise analysis and symmetrization of implicit Monte Carlo samplers, Communications on Pure and Applied Mathematics 69: 1924-1951, 2016.

- M. Morzfeld, X. Tu, E. Atkins, A.J. Chorin, A random map implementation of implicit filters, Journal of Computational Physics 231: 2049-2066, 2012.

- M. Morzfeld, X. Tu, J. Wilkening, A.J. Chorin, Implicit sampling for parameter estimation, Communications in Applied Mathematics and Computational Science 10(2): 205–225, 2015.

- C. Snyder, T. Bengtsson, M. Morzfeld, Performance bounds for particle filters using the optimal proposal, Monthly Weather Review 143: 4750–4761, 2015.

- S. Agapiou, O. Papaspiliopoulos, D. Sanz-Alonso, and A. M. Stuart, Importance sampling: intrinsic dimension and computational cost, Statistical Science, 32 (3), 405-431, 2017.

- M. Morzfeld and D. Hodyss, What the collapse of the ensemble Kalman filter tells us about particle filters, Tellus A: Dynamic Meteorology and Oceanography, 69:1, 1283809, 2017.

- A.J. Chorin, F. Lu, R.N. Miller, M. Morzfeld, and X. Tu, Sampling, feasibility, and priors in data assimilation, Discrete and Continuous Dynamical Systems 36(8), special issue dedicated to Peter D. Lax on the occasion of his ninetieth birthday, 2016.

- J. Poterjoy, A localized particle filter for high-dimensional nonlinear systems, Monthly Weather Review 144 (1), 59-76, 2016.

- J. Poterjoy, J.L. Anderson, Efficient assimilation of simulated observations in a high-dimensional geophysical system using a localized particle filter, Monthly Weather Review 144 (5), 2007-2020, 2016.

- J. Poterjoy, R.A. Sobash, J.L. Anderson, Convective-scale data assimilation for the weather research and forecasting model using the local particle filter, Monthly Weather Review 145 (5), 1897-1918, 2017.

- R. van Handel, P. Rebeschini, Can local particle filters beat the curse of dimensionality? Annals of Applied Probability 25, 2809-2866, 2015.

- M. S. Arulampalam, S. Maskell, N. Gordon and T. Clapp, A tutorial on particle filters for online nonlinear/non-Gaussian Bayesian tracking, IEEE Transactions on Signal Processing, vol. 50(2), 174-188, 2002.

- M. Ades, P.J. van Leeuwen, The equivalent-weights particle filter in a high-dimensional system, Quarterly Journal of the Royal Meteorological Society, Vol. 141(687), 484-503, 2015.

- M. Morzfeld, D. Hodyss, J. Poterjoy, Variational particle smoothers and their localization, Quarterly Journal of the Royal Meteorological Society, in press, 2018.

MCMC

- Jonathan Goodman's lecture notes.

- Alan Sokal's lecture notes.

- W.R. Gilks, S. Richardson, D.J. Spiegelhalter (editors), Markov Chain Monte Carlo in Practice, Springer-Science+Business Media, 1996.

- Steve Brooks, Andrew Gelman, Galin L. Jones and Xiao-Li Meng (editors), Handbook of Markov Chain Monte Carlo, Chapman and Hall/CRC, 2010.

- R.M. Neal, MCMC Using Hamiltonian Dynamics, Chapter 5 in: Handbook of Markov Chain Monte Carlo, Chapman and Hall/CRC, 2010.

- G.O. Roberts and J.S. Rosenthal, Optimal scaling of discrete approximations to Langevin diffusions, Journal of the Royal Statistical Society: Series B, 60, 255-268, 1998.

- S.L. Cotter, G.O. Roberts, A.M. Stuart and D. White, MCMC methods for functions: modifying old algorithms to make them faster, Statistical Science, 28(3), 424–446, 2013.

- A. Beskos, N. Pillai, G. Roberts, J.-M. Sanz-Serna and A. Stuart, Optimal tuning of the Hybrid Monte-Carlo Algorithm, Bernoulli, 19(5A), 1501-1534, 2013.

- U. Wolff, Monte Carlo errors with less errors, Computer Physics Communications, 156, 143-153, 2004.

Contact Matti:

Office: ENR2 S331

Email: mmo [at] math [dot] arizona [dot] edu

Office hours: M 3-4, T 1-3

Contact Philip Strzelecki (TA):

Office: ENR2, southeast side of 3rd floor

Email: philstrzelecki [at] math [dot] arizona [dot] edu

Office hours: TBD